Launching an Unknown Container: A Practical Guide to Variable Analysis Using the Azure AI Translator Container

Containers are now everywhere in the digital world, from application development to infrastructure deployment, and many contributors publish ready-to-use container images.

Suppose you get a container image, but its documentation is not particularly detailed. How can you efficiently analyze its variables (environment variables, exposed ports, the runtime user, and mount paths) so that it starts up smoothly?

I use the Azure AI Translator container image as an example; you can pull it and try it out yourself:

```
mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest
```

To make this quick to reproduce, I will capture each finding in a docker-compose.yaml so that we share the same understanding at every step.

Inspect it

The first step is simply to inspect the image. You can use either `docker inspect` or `podman inspect`; any tool that follows the OCI specification will show you the same information.

```bash
$ docker inspect mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest
```

```json
[
  {
    "Config": {
      "User": "nonroot",
      "ExposedPorts": {
        "5000/tcp": {}
      },
      "Env": [
        "PATH=/opt/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",
        "ENVIRONMENT=onprem",
        "SERVICE=texttranslation",
        "MODEL_PATH=/usr/local/models",
        "MODEL_DEBUG_PATH=/usr/local/debug",
        "LD_LIBRARY_PATH=/app",
        "ASPNETCORE_URLS=http://*:5000"
      ],
      "Entrypoint": [
        "/usr/sbin/busybox",
        "sh"
      ],
      "Cmd": [
        "-c",
        "./start_service.sh"
      ],
      "WorkingDir": "/app",
      "Labels": {
        "com.visualstudio.machinetranslation.image.build.buildnumber": "1.0.03049.787",
        "com.visualstudio.machinetranslation.image.build.builduri": "vstfs:///Build/Build/316441",
        "com.visualstudio.machinetranslation.image.build.definitionname": "OneBranch-mt-api-frontend-OnPrem-Official",
        "com.visualstudio.machinetranslation.image.build.repository.name": "mt-api-frontend",
        "com.visualstudio.machinetranslation.image.build.repository.uri": "https://machinetranslation.visualstudio.com/MachineTranslation/_git/mt-api-frontend",
        "com.visualstudio.machinetranslation.image.build.sourcebranchname": "master",
        "com.visualstudio.machinetranslation.image.build.sourceversion": "5fd62596eaa10a9cb2719864c8751cebcbed9789",
        "com.visualstudio.machinetranslation.image.system.teamfoundationcollectionuri": "https://machinetranslation.visualstudio.com/",
        "com.visualstudio.machinetranslation.image.system.teamproject": "MachineTranslation",
        "image.base.digest": "sha256:bf86bdf8eadf0bfcbfab3011a260d694428af66ed8c22e2e54aa32a99b93a8e3",
        "image.base.ref.name": "mcr.microsoft.com/cbl-mariner/distroless/base:2.0"
      },
      "ArgsEscaped": true
    }
  }
]
```

Most of the answers you need are in the `.Config` field of the `docker inspect` output (abridged above to that field).

Now, you already know the following information:

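- Base image: mcr.microsoft.com/cbl-mariner/distroless/base:2.0 (from the `image.base.ref.name` label)
- Exposed port: 5000/tcp
- User: nonroot
- Working directory: /app
- Entrypoint: /usr/sbin/busybox sh
- Cmd: -c ./start_service.sh
- Env: ENVIRONMENT, SERVICE, MODEL_PATH, MODEL_DEBUG_PATH, LD_LIBRARY_PATH, ASPNETCORE_URLS (plus PATH)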
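Rather than copying the `Env` entries by hand, you can ask `docker inspect` to print only that field with a Go template and turn the result into compose `environment:` entries. The sketch below assumes one `KEY=VALUE` pair per line on stdin; `env_to_compose` is a hypothetical helper name of my own, and it deliberately skips `PATH` because the image already sets it.

```bash
#!/bin/bash
# Hypothetical helper: read KEY=VALUE lines (one per line) on stdin and print
# them as a docker-compose "environment:" list. PATH is skipped because the
# image already sets it. Values containing ": " or " #" would need quoting in
# YAML; this sketch does not handle that.
env_to_compose() {
  local line
  printf 'environment:\n'
  # "|| [ -n "$line" ]" keeps a final line that has no trailing newline
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      ''|PATH=*) continue ;;
    esac
    printf '  - %s\n' "$line"
  done
}
```

For example, `docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest | env_to_compose` prints the six non-PATH variables as list items, ready to be re-indented under the service.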

Translated into a first docker-compose.yaml, this gives:

```yaml
services:
  azure-ai-translator:
    image: mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest
    container_name: azure-ai-translator
    user: "nonroot"
    expose:
      - "5000"
    ports:
      - "5000:5000"
    environment:
      - ENVIRONMENT=onprem
      - SERVICE=texttranslation
      - MODEL_PATH=/usr/local/models
      - MODEL_DEBUG_PATH=/usr/local/debug
      - LD_LIBRARY_PATH=/app
      - ASPNETCORE_URLS=http://*:5000
    entrypoint:
      - /usr/sbin/busybox
      - sh
    command:
      - -c
      - ./start_service.sh
    working_dir: /app
    # volumes:
    #   - ./your_local_models_path:/usr/local/models
```

Clearly, though, this information alone is not enough to get Azure AI Translator running well. We need to run the image and take a look at the contents of start_service.sh.

Run it

The image contains a startup script, start_service.sh, that reads quite a lot of environment variables you are expected to provide. For brevity, I won't list them all here; you can print the script yourself:

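```bash
#!/bin/bash
# cat-start_service.sh
# The image's entrypoint is `/usr/sbin/busybox sh`, so the arguments after the
# image name replace Cmd and run as `sh -c "cat ./start_service.sh"` in the
# working directory /app, printing the script instead of starting the service.
docker run --rm mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest -c "cat ./start_service.sh"
```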
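Since most of the effort goes into finding which variables the script reads, it can help to let the shell list every `$NAME` or `${NAME}` reference for you. This is a rough sketch using a plain regex, with `list_script_vars` as a hypothetical helper name: it will also report variables the script sets itself or inherits from the image (such as MODEL_PATH), and names that are not valid shell identifiers, such as `Mounts:License`, can never appear as `$NAME`, so treat the output as a checklist to review against the script rather than a definitive list.

```bash
#!/bin/bash
# Hypothetical helper: read a shell script on stdin and print every distinct
# variable name referenced as $NAME or ${NAME...}, one per line.
# Positional parameters such as $1 are ignored. LC_ALL=C keeps the sort order
# stable across locales.
list_script_vars() {
  grep -oE '\$\{?[A-Za-z_][A-Za-z0-9_]*' \
    | sed -E 's/^\$\{?//' \
    | LC_ALL=C sort -u
}
```

Usage: `docker run --rm mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest -c "cat ./start_service.sh" | list_script_vars`.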

The following docker-compose.yaml reflects what you can work out from start_service.sh. In fact, you will find that most of the time is spent figuring out which environment variables to pass to the container so that it operates correctly.

```yaml
services:
  azure-ai-translator:
    image: mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest
    user: "nonroot"
    container_name: azure-ai-translator
    expose:
      - "5000"
    ports:
      - "5000:5000"
    environment:
      # Azure AI Translator settings
      EULA: accept # (Required)
      APIKEY: ${TRANSLATOR_KEY} # (Required)
      BILLING: ${TRANSLATOR_ENDPOINT_URI} # (Required)
      LANGUAGES: zh-Hant,zh-Hans,en,ja,id,es,fr,ru,vi,it,pt,de,ko,tr,da,he,fa,ms,th,fil,ar # (Required)
      MODEL_PATH: /usr/local/models # (Required) Path to the language models; default is /usr/local/models
      HOTFIXDATAFOLDER: "" # (Optional) Path for the Hotfix feature; empty by default
      GENERATEHOTFIXTEMPLATE: "false" # (Optional) Set to true to generate a Hotfix template (also set HOTFIXDATAFOLDER); default is false
      DOWNLOADLICENSE: "false" # (Optional) Set to true to only download the license file without starting the service; default is false
      Mounts:License: "" # (Optional) Path for the License feature; empty by default
      CATEGORIES: "" # (Optional) Model categories, if needed; empty by default
      MODSENVIRONMENT: "" # (Optional) MoDS endpoint, if using the MoDS service; empty by default
      MODELS: "" # (Optional) Empty by default
      TRANSLATORSYSTEMCONFIG: "" # (Optional) Empty by default
      # .NET Core settings
      Mounts:Output: /logs # (Optional) However, I found no logs in the container
    # volumes:
    #   - ./your_local_models_path:/usr/local/models
```
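The compose file reads the key and endpoint through `${TRANSLATOR_KEY}` and `${TRANSLATOR_ENDPOINT_URI}`. Docker Compose fills these in from your shell environment or from a `.env` file in the project directory (the folder containing docker-compose.yaml). A minimal sketch that creates such a file; `write_env_file` is a hypothetical helper, and the values it writes are placeholders that you must replace with the key and endpoint of your own Translator resource.

```bash
#!/bin/bash
# Hypothetical helper: write a .env file that docker compose reads to fill in
# ${TRANSLATOR_KEY} and ${TRANSLATOR_ENDPOINT_URI}. The values are
# placeholders only, not real credentials.
write_env_file() {
  local target="${1:-.env}"
  if [ -e "$target" ]; then
    echo "$target already exists; not overwriting it" >&2
    return 1
  fi
  # umask 077 inside a subshell: the new file is readable only by you,
  # since it will hold an API key.
  (
    umask 077
    cat > "$target" <<'EOF'
TRANSLATOR_KEY=<your-translator-key>
TRANSLATOR_ENDPOINT_URI=<your-translator-endpoint-uri>
EOF
  )
}
```

Run `write_env_file` next to docker-compose.yaml, then edit the two placeholder values before `docker compose up`.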

At this point, you should be able to run the container normally. But does that mean there are no issues? Not quite: how do you correctly mount a folder into it? If you want to keep the data stored inside the container (e.g. /usr/local/models) in a folder on the host (./your_local_models_path) without modifying the existing permissions, what should you do?

Find it

If the container is already running, you can use `docker exec` to run `id nonroot` inside it and check the user's UID and GID:

```bash
$ docker exec <container_name_or_id> id nonroot
```

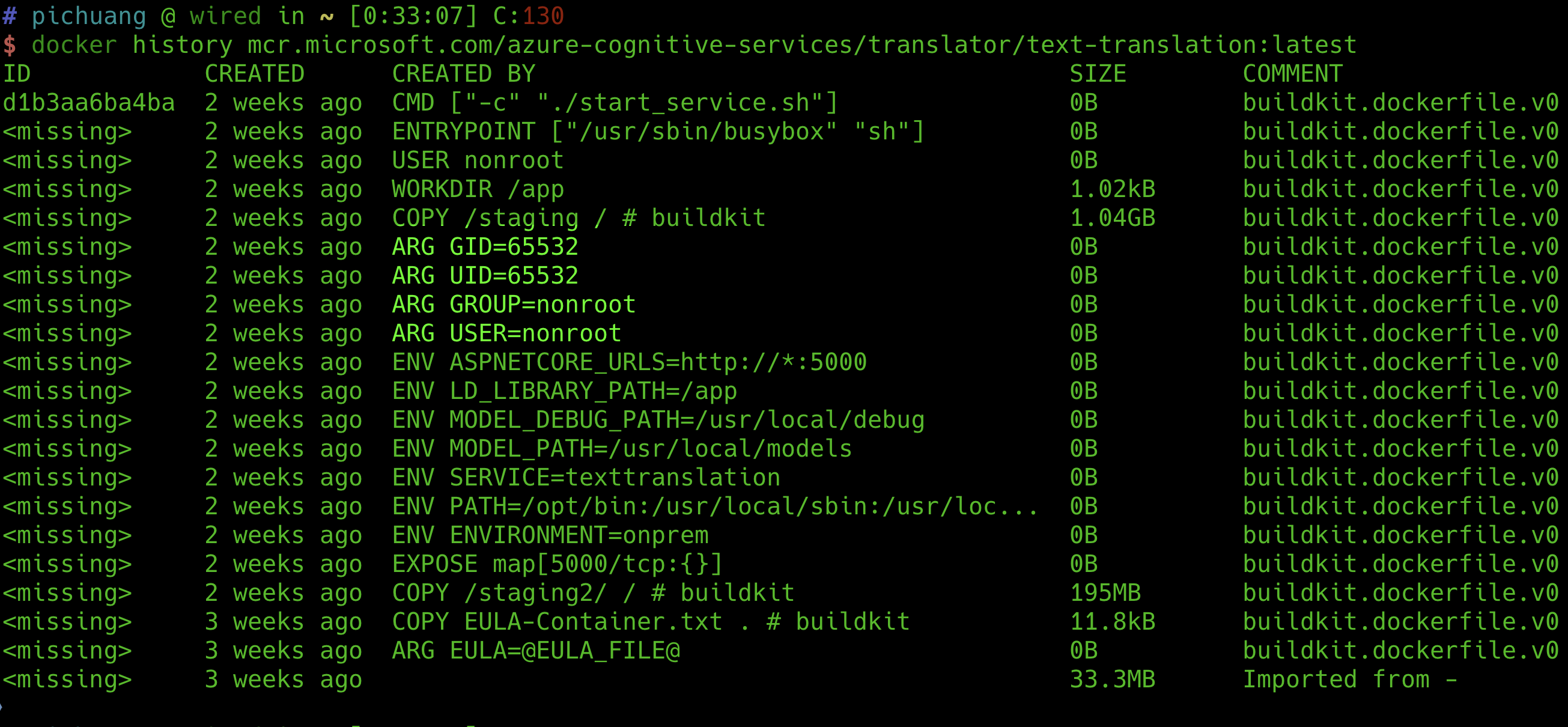

```
uid=65532(nonroot) gid=65532(nonroot) groups=65532(nonroot)
```

Alternatively, you can look for clues with `docker history`. Most permission-related settings are written in the Dockerfile, and `docker history` shows the instruction that created each layer of the image, so you can follow how it was built (add `--no-trunc` to see the full commands).

```bash
#!/bin/bash
docker history mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest
```
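If the container is not running yet, you can reuse the trick from start_service.sh: because the entrypoint is `busybox sh`, `docker run --rm <image> -c "id nonroot"` runs `id` in a throwaway container without starting the service. The sketch below turns that output into the `UID:GID` pair that `chown` expects, so you do not have to copy the numbers by hand; `owner_from_id` is a hypothetical helper name of my own.

```bash
#!/bin/bash
# Hypothetical helper: turn `id` output such as
#   uid=65532(nonroot) gid=65532(nonroot) groups=65532(nonroot)
# into "65532:65532", the UID:GID form that chown accepts.
owner_from_id() {
  sed -nE 's/^uid=([0-9]+)[^ ]* gid=([0-9]+).*/\1:\2/p'
}
```

For example, `OWNER="$(docker run --rm mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest -c 'id nonroot' | owner_from_id)"` stores `65532:65532`, which you can then pass to `sudo chown -R "$OWNER" ...`.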

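Because the container runs as the nonroot user (UID 65532), it does not have permission to write to a host folder mapped into it: a bind mount keeps the host folder's numeric owner and permissions, and that folder normally belongs to your own host user (or to root, if Docker creates it for you). Therefore, when you want to use volumes to mount a folder, you need to change that folder's ownership or permissions first.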

```bash
#!/bin/bash
# create-directory.sh

# Create the folder that will store the models
mkdir -p ./azure-ai-translator/models

# (Required) Allow nonroot (UID/GID 65532) inside the container to write data to this folder
sudo chown -R 65532:65532 ./azure-ai-translator

# (Optional) Your own UID is normally not 65532, so if you also want to write
# data into this folder from the host, grant write access to others (o+w)
sudo chmod -R o+w ./azure-ai-translator
```
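Before starting the container, you can confirm that the ownership change actually took effect. A minimal sketch, assuming a host with a POSIX `ls` and `awk`; `check_owner` is a hypothetical helper name, and it compares numeric IDs because the host usually has no user named nonroot.

```bash
#!/bin/bash
# Hypothetical helper: succeed only if DIR is owned by the numeric UID:GID
# given as the second argument, e.g. 65532:65532 for nonroot.
# `ls -nd` lists the directory itself with numeric owner and group
# in columns 3 and 4.
check_owner() {
  local dir="$1" expected="$2" actual
  actual="$(ls -nd -- "$dir" | awk '{ print $3 ":" $4 }')"
  if [ "$actual" = "$expected" ]; then
    echo "OK: $dir is owned by $actual"
  else
    echo "MISMATCH: $dir is owned by $actual, expected $expected" >&2
    return 1
  fi
}
```

Usage: `check_owner ./azure-ai-translator/models 65532:65532`.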

Then update docker-compose.yaml to mount the folder:

```yaml
services:
  azure-ai-translator:
    image: mcr.microsoft.com/azure-cognitive-services/translator/text-translation:latest
    user: "nonroot"
    container_name: azure-ai-translator
    expose:
      - "5000"
    ports:
      - "5000:5000"
    environment:
      # Azure AI Translator settings
      EULA: accept # (Required)
      APIKEY: ${TRANSLATOR_KEY} # (Required)
      BILLING: ${TRANSLATOR_ENDPOINT_URI} # (Required)
      LANGUAGES: zh-Hant,zh-Hans,en,ja,id,es,fr,ru,vi,it,pt,de,ko,tr,da,he,fa,ms,th,fil,ar # (Required)
      MODEL_PATH: /usr/local/models # (Required) Path to the language models; default is /usr/local/models
      HOTFIXDATAFOLDER: "" # (Optional) Path for the Hotfix feature; empty by default
      GENERATEHOTFIXTEMPLATE: "false" # (Optional) Set to true to generate a Hotfix template (also set HOTFIXDATAFOLDER); default is false
      DOWNLOADLICENSE: "false" # (Optional) Set to true to only download the license file without starting the service; default is false
      Mounts:License: "" # (Optional) Path for the License feature; empty by default
      CATEGORIES: "" # (Optional) Model categories, if needed; empty by default
      MODSENVIRONMENT: "" # (Optional) MoDS endpoint, if using the MoDS service; empty by default
      MODELS: "" # (Optional) Empty by default
      TRANSLATORSYSTEMCONFIG: "" # (Optional) Empty by default
      # .NET Core settings
      Mounts:Output: /logs # (Optional) However, I found no logs in the container
    volumes:
      - ./azure-ai-translator/models:/usr/local/models
```

By this point, you should be able to run this initially unknown container correctly and have it write data into the mounted folder normally.
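Once the container has started, a single request is a quick way to confirm that the service really translates rather than merely starting. A minimal sketch, assuming the container answers the Translator v3 REST route `/translate?api-version=3.0` on the exposed port 5000 and that both languages you pick are in your LANGUAGES list; `TRANSLATOR_URL`, `translate_body`, and `translate` are hypothetical names of my own, so check the route against the container documentation for your image version.

```bash
#!/bin/bash
# Hypothetical smoke test for the running container. The route and
# api-version follow the public Translator v3 REST API (assumption).
TRANSLATOR_URL="${TRANSLATOR_URL:-http://localhost:5000}"

# Build the JSON request body for a single text, escaping backslashes and
# double quotes (other control characters are not handled in this sketch).
translate_body() {
  local text
  text="$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')"
  printf '[{"Text":"%s"}]' "$text"
}

# Send one translation request: translate FROM TO TEXT.
# Requires curl and the container to be up.
translate() {
  curl -sS -X POST "$TRANSLATOR_URL/translate?api-version=3.0&from=$1&to=$2" \
    -H 'Content-Type: application/json' \
    --data "$(translate_body "$3")"
}
```

For example, `translate en zh-Hans "Hello, world"` should return a JSON array containing the translated text; if it fails right after startup, check `docker logs azure-ai-translator` first.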